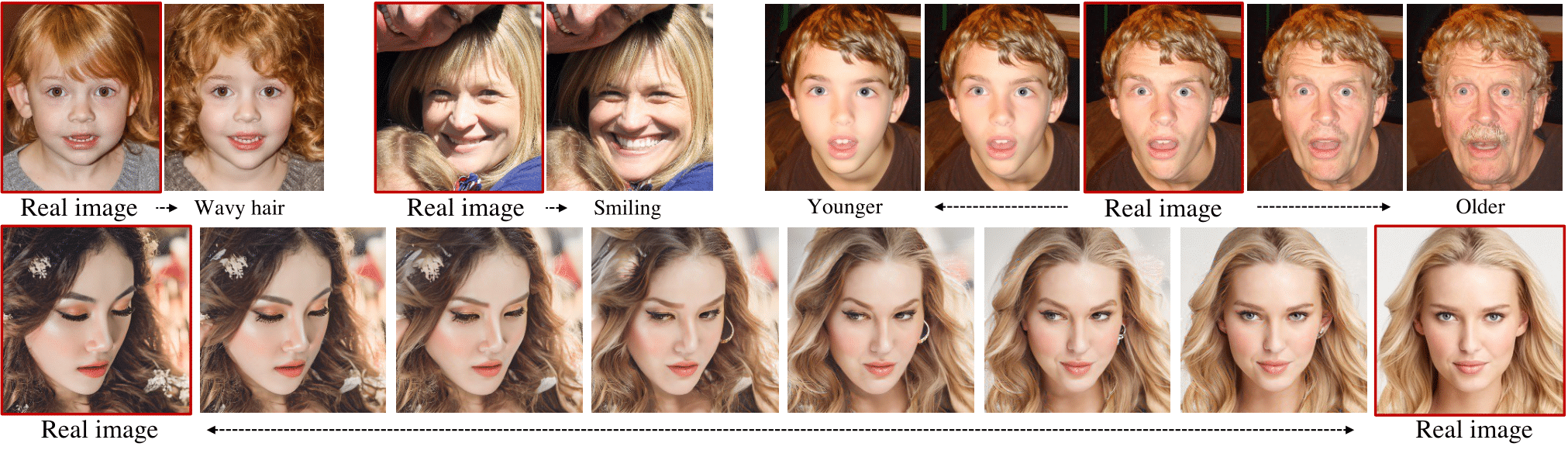

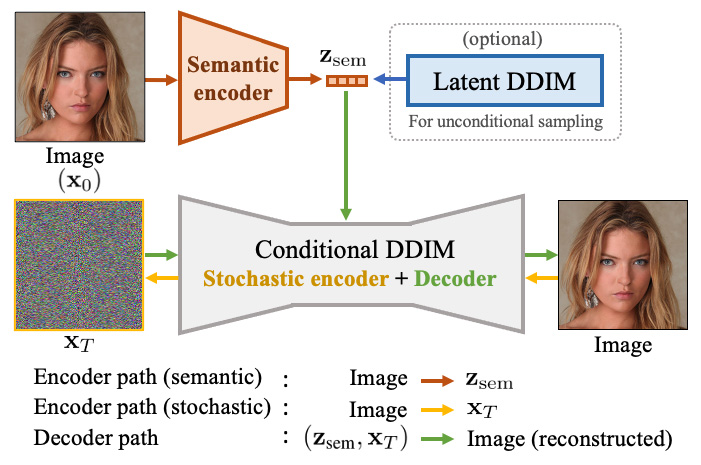

Diffusion probabilistic models (DPMs) have achieved remarkable quality in image generation that rivals GANs'. But unlike GANs, DPMs use a set of latent variables that lack semantic meaning and cannot serve as a useful representation for other tasks. This paper explores the possibility of using DPMs for representation learning and seeks to extract a meaningful and decodable representation of an input image via autoencoding. Our key idea is to use a learnable encoder for discovering the high-level semantics, and a DPM as the decoder for modeling the remaining stochastic variations. Our method can encode any image into a two-part latent code where the first part is semantically meaningful and linear, and the second part captures stochastic details, allowing near-exact reconstruction. This capability enables challenging applications that currently foil GAN-based methods, such as attribute manipulation on real images. We also show that this two-level encoding improves denoising efficiency and naturally facilitates various downstream tasks including few-shot conditional sampling. Our novel latent space is more readily discriminative than StyleGAN-W (via inversion) when used to encode real input images.

BibTex

@inproceedings{preechakul2021diffusion,

title={Diffusion Autoencoders: Toward a Meaningful and Decodable Representation},

author={Preechakul, Konpat and Chatthee, Nattanat and Wizadwongsa, Suttisak and Suwajanakorn, Supasorn},

booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2022},

}

Special thanks to Google Conference Scholarships for conference travel support.